1. Amdahl’s law

- speedup(f, n) = $\frac{1}{(1-f) + \frac{f}{n}}$

- f: fraction of program that is parallelizable

- n: number of parallel processors
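
The formula above can be checked numerically with a minimal sketch. The function name `amdahl_speedup` is only an illustrative choice, not something from the notes.

```python
def amdahl_speedup(f: float, n: int) -> float:
    """Speedup with parallelizable fraction f on n processors (Amdahl's law)."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be in [0, 1]")
    if n < 1:
        raise ValueError("n must be >= 1")
    # Serial part takes (1 - f); parallel part takes f / n.
    return 1.0 / ((1.0 - f) + f / n)


if __name__ == "__main__":
    # With f fixed, adding processors gives diminishing returns:
    # the speedup can never exceed 1 / (1 - f).
    for n in (1, 4, 16, 256):
        print(n, round(amdahl_speedup(0.9, n), 2))
```

Even with unlimited processors, the serial fraction 1 - f bounds the speedup at 1 / (1 - f).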

1.1. resource-limited symmetric version

Suppose the total resource is fixed, and each processor uses the same amount of resources.

- speedup(f, n, r) = $\frac{1}{\frac{1-f}{\mathrm{perf}(r)} + \frac{f}{\mathrm{perf}(r) \cdot \frac{n}{r}}}$

- f: fraction of program that is parallelizable

- n: total processing resources (in BCEs)

- r: resources dedicated to each processing core (in BCEs), giving n/r cores

- BCE (base core equivalent): generic unit of cost, e.g. area or power

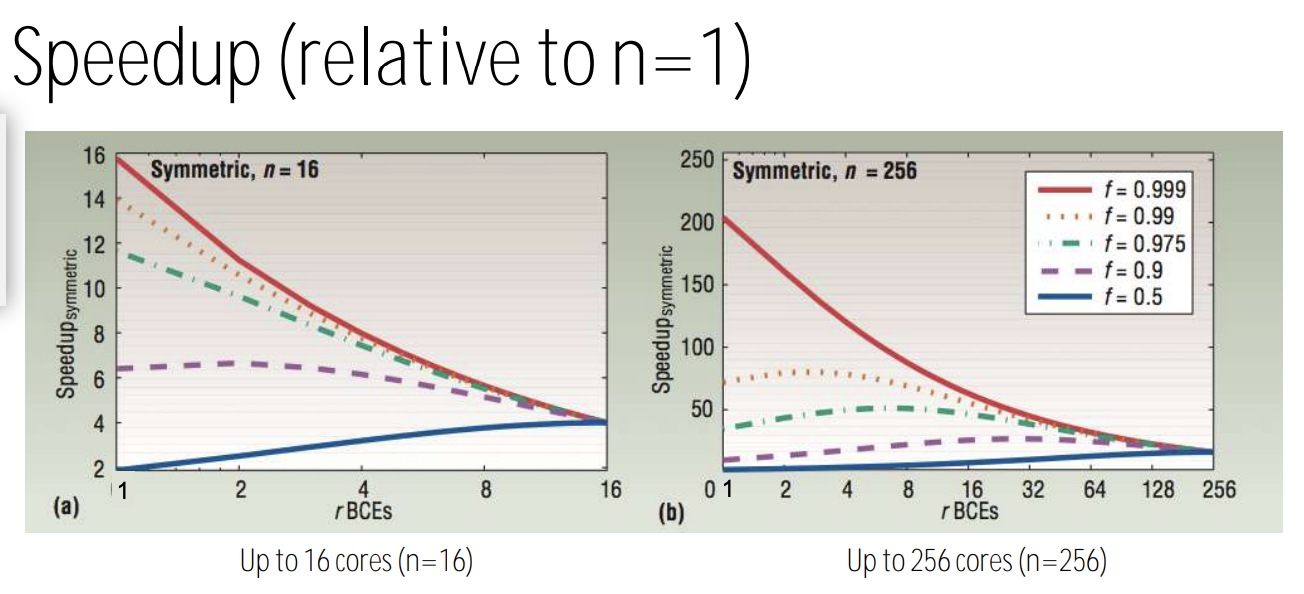

- x-axis: resources per core

- right: fewer fatter cores

- left: many small cores

- perf(r) modeled as $\sqrt{r}$

- graph:

- f = 0.5: for a workload with little parallelism, fewer but fatter cores win

- f = 0.999: for a workload with abundant parallelism, more but thinner cores win

- optimal point: peak point

- analysis 1: fraction f should be as high as possible

- analysis 2: having r > 1 BCEs per core can be beneficial (for n = 256, f = 0.975, maximum speedup at 7.1 BCEs per core)
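
The curves described above can be reproduced with a short sweep over r. This is a sketch under the notes' assumption perf(r) = sqrt(r). The helper names (`symmetric_speedup`, `best_r`) and the 0.1-BCE step size are illustrative choices, not part of the original.

```python
import math


def perf(r: float) -> float:
    """Sequential performance of a core built from r BCEs (assumed sqrt(r))."""
    return math.sqrt(r)


def symmetric_speedup(f: float, n: float, r: float) -> float:
    """Speedup of n/r identical cores of r BCEs each, relative to one 1-BCE core."""
    serial = (1.0 - f) / perf(r)          # one core runs the serial part
    parallel = f / (perf(r) * n / r)      # all n/r cores run the parallel part
    return 1.0 / (serial + parallel)


def best_r(f: float, n: int, steps_per_bce: int = 10):
    """Sweep r over [1, n] on a grid and return (r, speedup) at the peak."""
    candidates = [k / steps_per_bce
                  for k in range(steps_per_bce, n * steps_per_bce + 1)]
    r = max(candidates, key=lambda x: symmetric_speedup(f, n, x))
    return r, symmetric_speedup(f, n, r)


if __name__ == "__main__":
    for f in (0.5, 0.975, 0.999):
        r, s = best_r(f, 256)
        print(f"f={f}: peak near r={r} BCEs/core, speedup={s:.1f}")
```

With r = 1 the expression reduces to plain Amdahl's law. Under this model the curve is fairly flat around its peak, so nearby values of r give nearly the same speedup.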

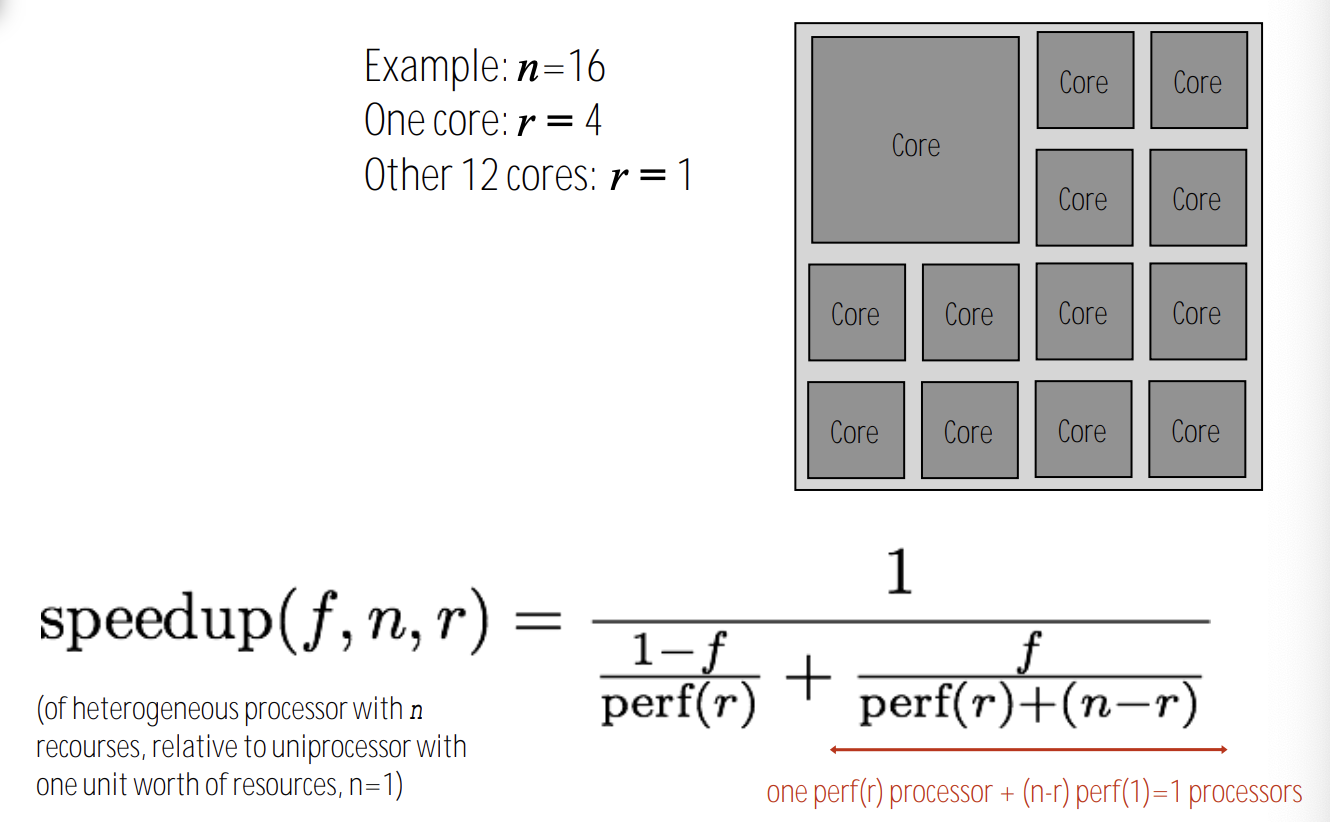

1.2. asymmetric set of processing cores

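- The figure above shows the speedup of an asymmetric (heterogeneous) processor with n resources relative to a single processor with one unit of resource

- The serial fraction 1-f executes at perf(r); the parallelizable fraction f executes at (n-r)·perf(1) + perf(r)

- perf(r) is the performance of a single core with r resources relative to a single core with one unit of resource

- The big/little-core architecture not only raises the maximum speedup

- but also shifts the position of the peak speedup

- and the peak positions for different workloads are clustered more closely together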
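
The asymmetric model described above (one big core of r BCEs plus n - r single-BCE cores) can be compared against the symmetric one with a small sketch. It again assumes perf(r) = sqrt(r), so perf(1) = 1. The function names are illustrative only.

```python
import math


def perf(r: float) -> float:
    """Performance of an r-BCE core relative to a 1-BCE core (assumed sqrt(r))."""
    return math.sqrt(r)


def symmetric_speedup(f: float, n: float, r: float) -> float:
    """n/r identical cores of r BCEs each."""
    return 1.0 / ((1.0 - f) / perf(r) + f / (perf(r) * n / r))


def asymmetric_speedup(f: float, n: float, r: float) -> float:
    """One big core of r BCEs plus (n - r) small cores of 1 BCE each."""
    serial = (1.0 - f) / perf(r)                      # big core runs serial code
    parallel = f / (perf(r) + (n - r) * perf(1.0))    # every core runs parallel code
    return 1.0 / (serial + parallel)


if __name__ == "__main__":
    f, n = 0.975, 256
    for r in (1, 4, 16, 64, 256):
        print(r,
              round(symmetric_speedup(f, n, r), 1),
              round(asymmetric_speedup(f, n, r), 1))
```

Under these assumptions the asymmetric speedup is never below the symmetric one for 1 ≤ r ≤ n. For example, with f = 0.975 and n = 256, r = 64 gives a speedup of about 125, versus roughly 51 at the symmetric peak.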

1.3. heterogeneous processing

real world

| Parallel | Serial |
|---|---|
| components that can be widely parallelized | components that are difficult to parallelize |
| components that are amenable to wide SIMD execution | components that are not (divergent control flow) |
| components with predictable data access | components with unpredictable access, but might cache well |

Idea: the most efficient processor is a heterogeneous mixture of resources (“use the most efficient tool for the job”)

real world heterogeneous

choosing the right tool for the job

- challenges of heterogeneity

- use preferred processor for each task

- what is the right mixture of resources to meet performance, cost, and energy goals?

- implication: increased pressure to understand workloads accurately at chip design time

- pitfalls of heterogeneous designs

- how to map programs onto a heterogeneous collection of resources?

- pick the right tool for the job: software portability nightmare

- reducing energy consumption idea 1: use specialized processing

- reducing energy consumption idea 2: move less data

note: the traditional rule of thumb in “good system design” is to design simple, general-purpose components; this is not the case with emerging processing systems

note: today we want a collection of components that meets the performance requirement AND minimizes energy use

note: the challenge of using these resources effectively is pushed up to the programmer